Project 1

Launched: Day 1

April 09, 2025

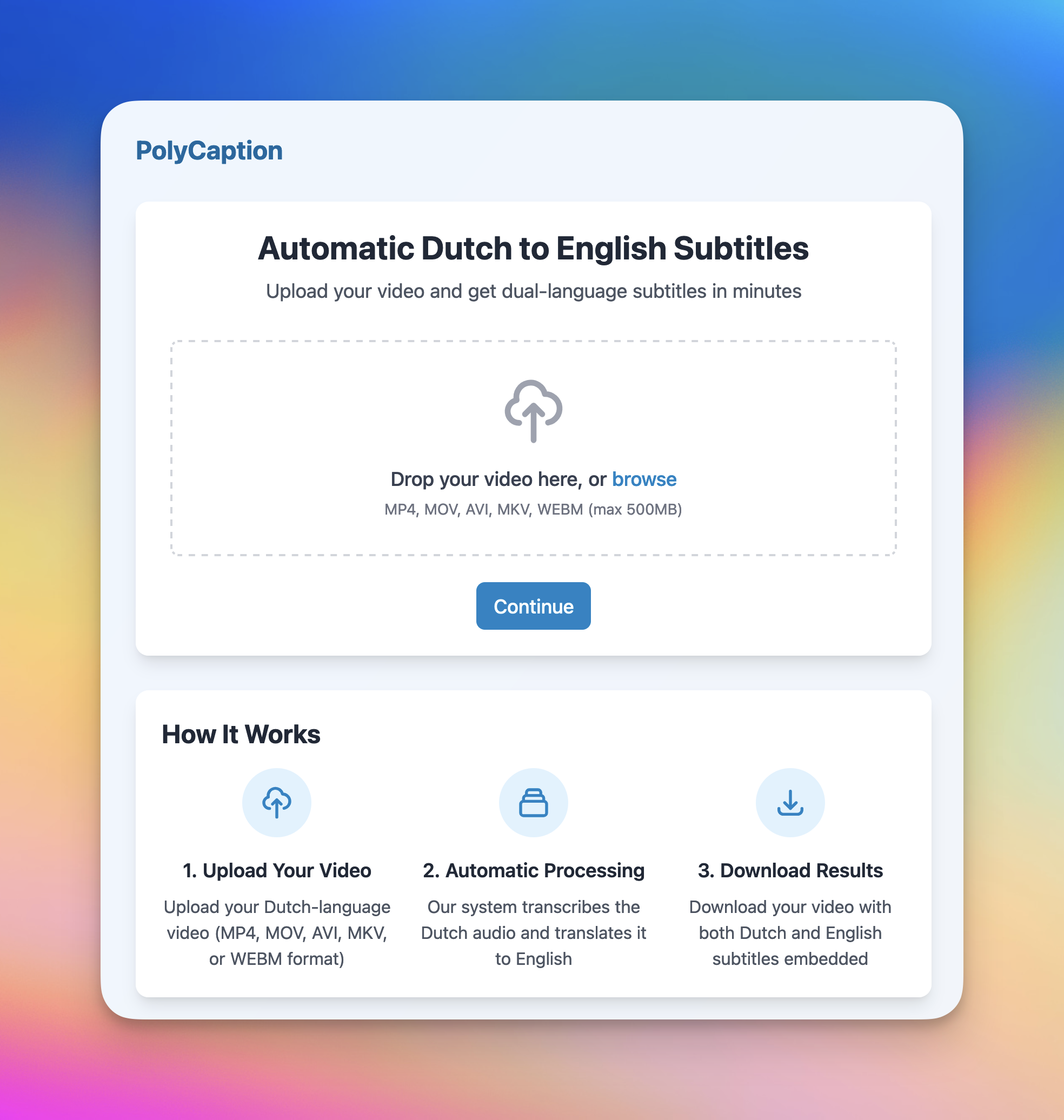

PolyCaption: dual-language video subtitles

A tool that adds dual-language subtitles to videos with support for mid-sentence language switching.

video

subtitles

language

transcription

Project Timeline

9.8h

Work Sessions

Day 3

April 11, 2025

Figuring out how to speed up subtitle rendering polycaption.com

1h 50m

Day 2

April 10, 2025

Researching serverless GPU functions polycaption.com

1h 47m

Researching FFmpeg over API or alternatives polycaption.com

25m

Cleaning server memory, fixing FFmpeg timeout error polycaption.com

24m

Day 1

April 09, 2025

Setting up basic interface and upload functionality polycaption.com

2h 42m

Troubleshooting SSH login on GitHub Actions polycaption.com

10m

Setting up automatic deployment, setting up polycaption.com, deploying boilerplate app

2h 25m

Launch Day

Day 1

Backstory

We needed this internally for videos on our Yudachi Instagram channel (@jedutchy). I wanted to simplify our editing process, and it seemed like something other people might want to use too.

What makes this tool interesting is that it supports accurate transcription when you switch languages mid-sentence, which isn't usually a feature in apps like CapCut, DaVinci Resolve, or any other editing software as far as I'm aware.

Before starting the 30-day challenge, I already had a sketch of a script ready that worked locally, and I wanted to see how it would work on the cloud. So for day one, I focused on getting the deployment pipeline set up and making the project itself live. I found a name I really liked, deployed it, and it worked pretty seamlessly. I'm very happy with how it turned out, though there are definitely performance challenges to solve.

The Challenge

Create a website where users can upload videos and receive them back with dual-language subtitles. The main technical challenges were setting up automatic deployment (CI/CD) and dealing with the resource-intensive process of rendering subtitles onto videos.

I develop apps by starting on the smallest server available, which makes bottlenecks very clear. Ideally, I solve these bottlenecks so I can run on an extremely lean server, but if I can't, I note the limit and upgrade when necessary.

Features

- Upload videos and get them rendered with dual-language subtitles

- Download video with burned-in subtitles ready for social media

- Support for language switching mid-sentence (unlike most editing software)

- Support for 7 languages including English, Dutch, Spanish, and German

Technical Implementation

The code is hosted on GitHub with a CI/CD pipeline. I built a Docker image that contains all the server setup including Caddy as a web server and Granian as the WSGI server. The backend is a Flask app that uses FFmpeg for rendering. DNS is managed through Cloudflare, and I'm using DigitalOcean's container registry for storing Docker images.
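As a rough illustration of the rendering step, here is a minimal sketch of how a Python backend might call FFmpeg to burn a subtitle file into a video. The function names, the timeout value, and the use of an `.srt` file are assumptions for illustration; the project's actual code and subtitle format are not shown here. The sketch also assumes simple file paths, since the `subtitles` filter needs extra escaping for paths with special characters.

```python
import subprocess
from pathlib import Path


def build_burn_in_command(video: Path, subtitles: Path, output: Path) -> list[str]:
    """Build an FFmpeg command that burns a subtitle file into a video.

    Hypothetical helper: assumes paths without characters that need
    escaping inside an FFmpeg filter expression.
    """
    return [
        "ffmpeg",
        "-y",                             # overwrite the output file if it exists
        "-i", str(video),                 # input video
        "-vf", f"subtitles={subtitles}",  # draw the subtitles onto every frame
        "-c:a", "copy",                   # copy the audio stream without re-encoding
        str(output),
    ]


def burn_in(video: Path, subtitles: Path, output: Path, timeout_s: int = 3600) -> None:
    """Run FFmpeg; raises if it exits with an error or exceeds the timeout."""
    cmd = build_burn_in_command(video, subtitles, output)
    subprocess.run(cmd, check=True, timeout=timeout_s)
```

Because the video stream has to be re-encoded with the subtitles drawn in, this step is where the CPU time goes.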

Monetization

The service will likely need to be monetized based on processing time or video length due to the resource-intensive nature of video rendering. A subscription model with tiered access based on minutes processed per month would make sense.

What I Learned

I knew that video editing was resource-intensive, but I didn't expect it to be this bad. On the smallest DigitalOcean server, rendering a 7-second video takes about 45 minutes with limited concurrency. That works out to only about 200 seconds of rendered video per day, which is extremely low throughput.
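The daily figure can be checked with a quick calculation. This sketch assumes one render at a time, running back to back for a full 24 hours; the function name is made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_video_seconds(clip_seconds: float, render_minutes: float) -> float:
    """Seconds of finished video per day if renders run back to back."""
    render_seconds = render_minutes * 60
    renders_per_day = SECONDS_PER_DAY / render_seconds
    return clip_seconds * renders_per_day


# A 7-second clip that takes 45 minutes to render: 32 renders per day
print(daily_video_seconds(7, 45))  # prints 224.0
```

So the ceiling is roughly 224 seconds per day, in line with the "about 200 seconds" estimate.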

I also learned that having a CI/CD pipeline from the beginning removes the friction from actually shipping a project. This is now my first step with any new project: buy the domain, set up DNS with Cloudflare, and configure the CI/CD pipeline.

Final Thoughts

Time tracking has been satisfying - starting a project in the morning and shipping it the same day feels great. While the current rendering process is extremely slow, I plan to optimize it by either implementing client-side rendering or setting up a more powerful server with multiple CPUs to handle a rendering queue.

I spent quite some time on day two trying to get client-side rendering working with WebAssembly and FFmpeg. While it's faster than server-side rendering, it's still too slow, and getting it to work consistently with subtitles isn't straightforward. I think I'll finish the client-side approach, but in the meantime I'll set up a more powerful server with 8+ vCPUs to work through a rendering queue. Since this is a CPU-intensive task, adding more CPUs (and probably RAM) should help. Later I could perhaps add GPU support for even higher throughput.
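One possible shape for that rendering queue is a bounded worker pool. This is a sketch under assumptions, not the planned implementation: `render_all` and the `render` callable are hypothetical names, and `render` stands in for whatever function launches FFmpeg for one video. Threads are enough here because each worker would mostly wait on an external FFmpeg process.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Optional


def render_all(
    jobs: Iterable[str],
    render: Callable[[str], str],
    workers: Optional[int] = None,
) -> list[str]:
    """Run queued render jobs with at most `workers` running at once."""
    # Default to one worker per CPU reported by the OS (assumption: a
    # reasonable starting point, to be tuned against real render times).
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() keeps results in input order; jobs beyond the worker
        # limit wait in the pool's internal queue until a slot frees up.
        return list(pool.map(render, jobs))
```

FFmpeg is itself multithreaded, so one worker per vCPU may oversubscribe the machine; a lower worker count could turn out to be faster in practice.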

I'm excited to be doing this challenge and it feels good to ship something people can use immediately.